The SafeToNet Foundation is a UK registered charity and is part of the SafeToNet safeguarding and wellbeing brand, which focusses on safeguarding children in the online digital context. At first blush this may seem a simple thing to achieve; after all, there are “Parental Controls” that come with smartphones, so all you have to do is turn them on and all’s well. Sadly, no. In fact, using these embedded “Parental Controls” won’t solve online harms such as cyberbullying, grooming, sextortion or the commercialisation of underage children. On the contrary, these “controls” are likely to make things worse, not better.

The Online Harms white paper stems from David Cameron’s original speech in July 2013, in which he said “I want Britain to be the best place to raise a family; a place where your children are safe, where there’s a sense of right and wrong and proper boundaries between them, where children are allowed to be children. And all the actions we’re taking today come back to that basic idea: protecting the most vulnerable in our society, protecting innocence, protecting childhood itself”. A laudable aim indeed.

Cameron saw two challenges: one was the proliferation of illegal material, specifically Child Sexual Abuse Material (CSAM); the other was legal material (in this case “adult pornography”) being viewed by underage people. In this speech Cameron focussed principally on search engines which, when the entirety of the online digital context is considered, is a rather limited view.

The Online Harms white paper evolved into much more than an attempt to make the “internet” (actually the World Wide Web) a safe space for children, and has become a catch-all for nearly everything and everyone.

Curiously, it didn’t include one of the two challenges that Cameron clearly identified in his speech: that of children accessing otherwise legal but unsuitable content, i.e. adult pornography. That was originally hived off into an “Age Verification” project under the Digital Economy Act 2017, which was abandoned amid some controversy shortly before the last General Election was announced.

Other than Age Verification, it swept up pretty much everything else for everybody. It didn’t identify children as a special case, which led some commentators to say that the Government was trying to treat everyone as children and was “dumbing everything down”. Others felt it would have been easier if the Online Harms white paper had focussed solely on keeping children safe online, letting adults behave however they want (within the context of existing legislation).

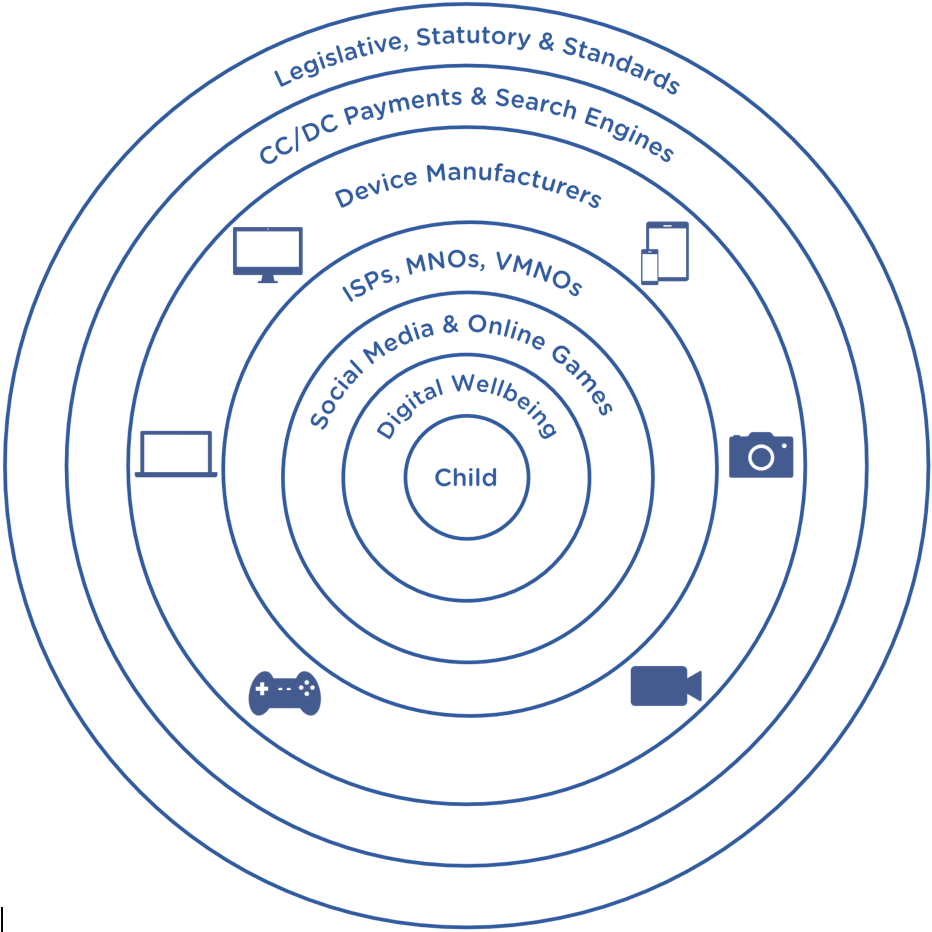

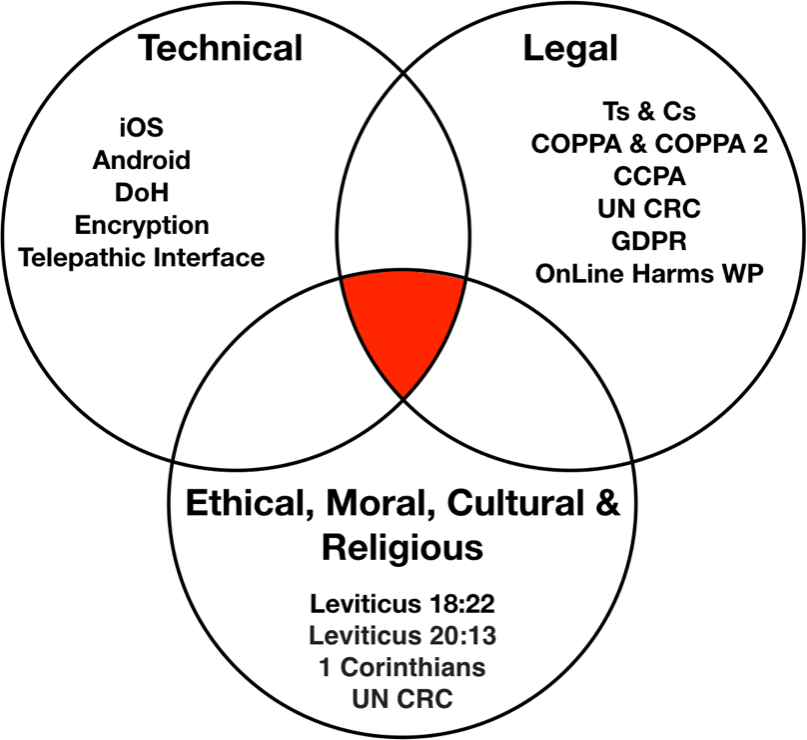

Our experience shows that safeguarding children in the global online digital context sits at the intersection of technology, law, ethics, culture and religion. It is at the centre of a vast Venn diagram, the interleaving circles of which are tectonic plates that push, squeeze, enable and disable online safeguarding capabilities.

In short, keeping children safe online is a complex thing to achieve, far more so than Cameron could have accounted for in his 2013 speech.

Take cyberbullying as an example. Cyberbullying isn’t illegal; it is regarded by many as a “legal harm”. Indeed, the Online Harms white paper lists cyberbullying as a “harm with a less clear definition”. The white paper makes no attempt to define it, yet its purpose is to pave the way for regulating against it. If there is no agreed definition, then regulation would seem impossible.

The Anti-Bullying Alliance does have a definition: “…the online repetitive, intentional hurting of one person or group by another person or group, where the relationship involves an imbalance of power that is carried out through the use of electronic media devices, such as computers, laptops, smartphones, tablets, or gaming consoles”.

But what does this actually mean? In and of itself, this is not illegal behaviour. But is that really the case? What does “online repetitive, intentional hurting” mean? Does it mean the repeated sending of messages via a public communications network that are grossly offensive, indecent, obscene or menacing?

Because if it does, then that contravenes Section 127 of the Communications Act 2003, and that is illegal. And if you’re a 10, 11, 12 or 13 year old child on social media and receive grossly offensive, indecent, obscene or menacing messages from people known or unknown every time you look at your smartphone, at any time of day, hundreds of times a day, what impact will that have on you?

How will the regulator regulate against children using grossly offensive, indecent, obscene or menacing messages on end-to-end encrypted social media services, even when such communications clearly breach existing British communications legislation, and especially when the operator of those services is based in another country’s jurisdiction? How will it hold the foreign-based directors of such companies to account for their platforms enabling the transmission of such messages, when the messages themselves are fully encrypted and unreadable by these companies? Isn’t that the point of end-to-end encryption?

Part of the SafeToNet Foundation’s charitable objectives, as agreed with the Charity Commission, is to educate and inform the public about this complex and dynamic topic. We do this by providing Advice & Guidance for parents and children from subject matter experts through our website www.safetonetfoundation.org, and by providing assessments of the risks to child safety that online apps and games present. We also produce an ongoing series of podcasts in which we explore safeguarding in the online digital context with thought leaders and high-profile practitioners from around the world. And we produce blog entries such as this one.

Despite research showing that social media usage creates depressive symptoms in its teenage users, and all the other online harms that children are subject to, social media is here to stay and children will continue to use it.

SafeToNet has also built a range of mental wellbeing features into its AI-powered keyboard that help children better manage their emotions, so that they can recognise the impact that other people’s online behaviour has on them and are better equipped to deal with it.

If the British Government wants to use tech to help combat the problems caused by tech (“tech for good”), then it needs to find ways to work with, encourage, foster, nurture and promote these kinds of innovation.

In this way, together with its legislative powers, it can achieve its aim of making the UK the safest place for children to be online.